27.05.2025

Want to build better software? Software quality metrics provide a quantifiable way to assess and improve your codebase. This listicle outlines eight essential software quality metrics, from code coverage and cyclomatic complexity to customer satisfaction and technical debt. Understanding these metrics empowers you to make informed decisions, reduce development costs, and deliver high-quality software that meets user needs. This knowledge is critical for success in the competitive software market. We'll explore each metric and its importance for businesses like yours in the UK.

Code coverage is a fundamental software quality metric that measures the percentage of your source code executed during automated testing. It provides valuable insights into the effectiveness of your testing strategy by highlighting how much of your codebase is actually being exercised by tests. This helps identify potentially buggy, untested areas, ultimately contributing to higher quality and more reliable software. In essence, it answers the question: "How much of our code have we actually tested?"

Code coverage is not a single number. It can be measured at several levels, each giving a different view of how thoroughly the tests exercise the code:

Statement coverage (Line coverage): The most basic type, measuring the proportion of statements (or lines) of code executed during tests.

Branch coverage (Decision coverage): Measures whether both the true and false branches of conditional statements (if/else) are tested.

Function coverage: Tracks whether each function or method in the codebase has been called during testing.

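Path coverage: The most demanding type, measuring how many of the possible execution paths through the code have been exercised. Because the number of paths grows exponentially with the number of decisions, full path coverage is rarely achievable in practice.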
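To make the difference between statement and branch coverage concrete, here is a minimal Python sketch that uses the standard library's `sys.settrace` hook to record which lines of a small function run and which line-to-line transitions ("arcs") are taken. Measuring arcs is similar in spirit to how coverage.py measures branches, but this is only a toy: `apply_discount`, `trace_function`, and the other names are hypothetical, and real projects should use a dedicated coverage tool.

```python
import sys


def apply_discount(price: float, is_member: bool) -> float:
    if is_member:
        price = price * 0.9
    return price


def trace_function(func, *args):
    """Run func(*args) and return (lines, arcs) executed inside func.

    Line numbers are relative to the `def` line, so 1 is the first body line.
    An arc is a (from_line, to_line) pair of consecutively executed lines.
    """
    code = func.__code__
    first = code.co_firstlineno
    lines, arcs = set(), set()
    prev = None

    def tracer(frame, event, arg):
        nonlocal prev
        if frame.f_code is not code:
            return None  # do not trace any other function
        if event == "line":
            rel = frame.f_lineno - first
            if rel > 0:  # ignore any event reported on the def line itself
                lines.add(rel)
                if prev is not None:
                    arcs.add((prev, rel))
                prev = rel
        return tracer

    sys.settrace(tracer)
    try:
        func(*args)
    finally:
        sys.settrace(None)
    return lines, arcs


def percent(covered: set, required: set) -> float:
    return 100.0 * len(covered & required) / len(required)


# Body lines of apply_discount: 1 = `if`, 2 = assignment, 3 = `return`
STATEMENTS = {1, 2, 3}
# The two outcomes of the `if`: true goes to line 2, false jumps to line 3
BRANCHES = {(1, 2), (1, 3)}

lines, arcs = trace_function(apply_discount, 100.0, True)
print(f"statement coverage: {percent(lines, STATEMENTS):.0f}%")
print(f"branch coverage:    {percent(arcs, BRANCHES):.0f}%")
```

With only the `is_member=True` test, every statement runs, so statement coverage reaches 100%, yet the path where the condition is false is never taken, so branch coverage is 50%. Adding a second test with `is_member=False` closes the gap.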

Most modern code coverage tools can run as a step in a Continuous Integration/Continuous Delivery (CI/CD) pipeline and publish coverage reports and visualisations on every build. This lets developers monitor trends, spot drops in coverage, and address gaps promptly.

Code coverage offers several advantages:

Pinpoints Untested Code: It clearly identifies gaps in your testing strategy by highlighting areas of the codebase that haven't been executed during tests.

Quantitative Assessment: Provides a measurable and quantifiable way to assess the thoroughness of your testing efforts.

Regression Prevention: By showing which parts of the codebase are exercised by tests, code coverage helps teams make sure that changed code is protected by tests that can catch regressions, such as the reintroduction of previously fixed bugs.

Compliance Support: In regulated industries, such as avionics software certified under DO-178C, demonstrating adequate structural code coverage is often a requirement for compliance.

Easy Communication: The concept of code coverage is straightforward and easy to explain to stakeholders, facilitating clear communication about testing effectiveness.

However, relying solely on code coverage has its drawbacks:

Quality vs. Quantity: High code coverage doesn't necessarily equate to high-quality tests. Tests might execute code without effectively checking for errors.

Metric-Driven Development: An overemphasis on code coverage can encourage developers to write superficial tests just to inflate the numbers, rather than focusing on meaningful test cases.

Missed Logic Errors: Even covered code can contain logic errors that go undetected if tests aren't designed to catch them.

Unrealistic 100%: Achieving 100% code coverage is often impractical, unnecessary, and can be detrimental to development velocity.

Development Bottleneck: Over-emphasizing coverage can slow down the development process as developers spend excessive time writing tests for trivial code sections.

Several industry giants successfully leverage code coverage to improve their software quality: Google maintains over 85% code coverage across most projects, Netflix uses it to ensure the reliability of its streaming services, Microsoft mandates minimum coverage thresholds for critical components, and Spotify uses coverage data to prioritize testing efforts.

Code coverage is an important metric, but it's not the only one. For a broader view of code quality, consider tracking the key software quality metrics covered by Pull Checklist.

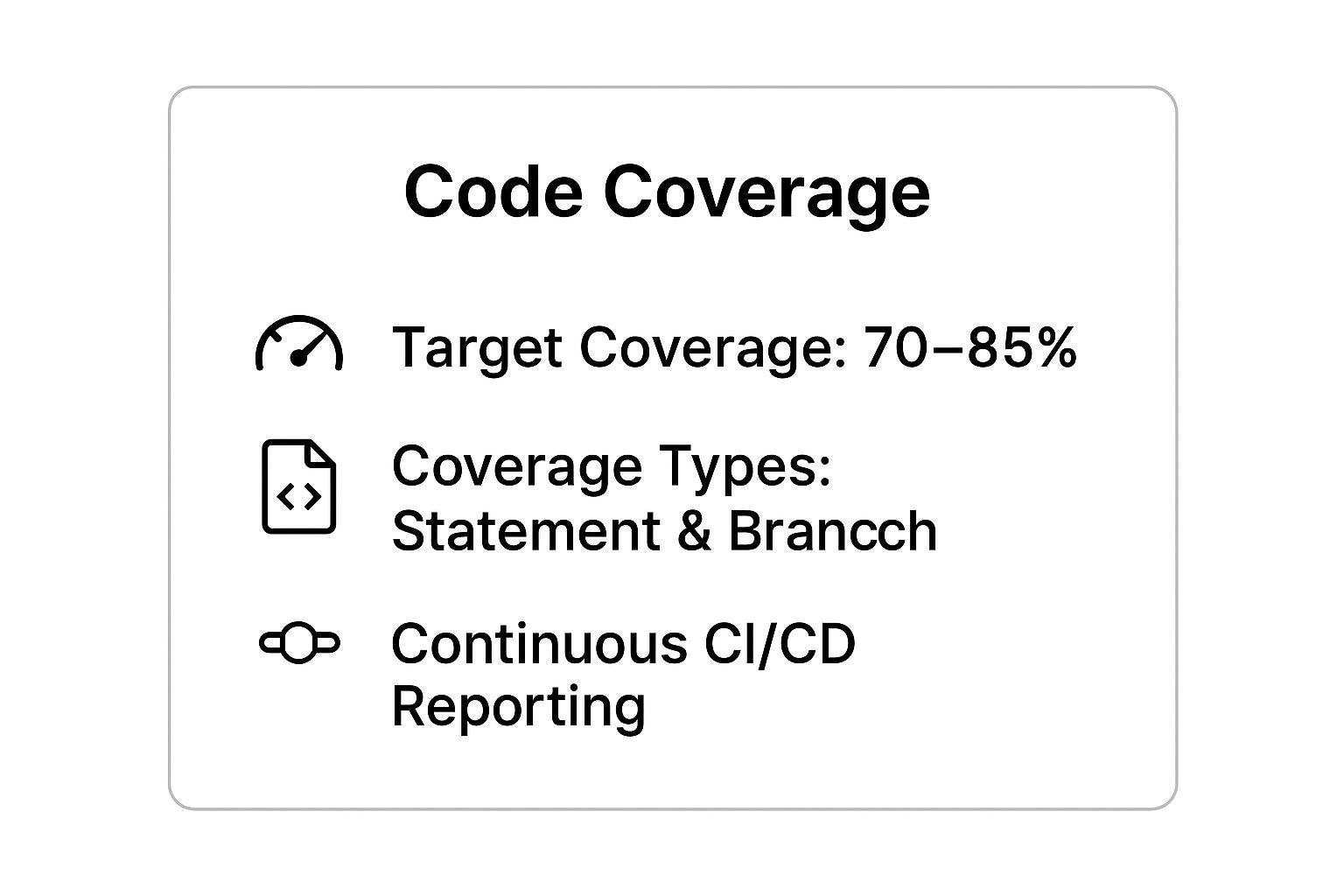

Here's a quick reference summary of key takeaways regarding Code Coverage:

This infographic highlights three essential aspects of code coverage: aiming for a practical target range (70-85%), focusing on the most useful coverage types (statement and branch), and reporting coverage in the CI/CD pipeline so it is monitored on every build. Sensible targets combined with continuous monitoring are what make this metric useful in practice.
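As a minimal sketch of such a CI gate, the Python script below reads a Cobertura-style XML report (the format written by, for example, coverage.py's `coverage xml` command) and signals failure when line coverage drops below a floor. The 70% threshold, the `coverage.xml` path, and the function names are assumptions for illustration; coverage.py can also enforce a floor directly with `coverage report --fail-under=70`.

```python
import xml.etree.ElementTree as ET

MIN_LINE_RATE = 0.70  # assumed floor: the low end of the 70-85% target range


def check_coverage(report_xml: str, min_line_rate: float = MIN_LINE_RATE) -> tuple[bool, float, float]:
    """Return (passed, line_rate, branch_rate) for a Cobertura-style XML report."""
    root = ET.fromstring(report_xml)
    line_rate = float(root.get("line-rate", "0"))
    branch_rate = float(root.get("branch-rate", "0"))
    return line_rate >= min_line_rate, line_rate, branch_rate


def main(report_path: str = "coverage.xml") -> int:
    """CI entry point: returns a non-zero exit code when coverage is too low."""
    with open(report_path, encoding="utf-8") as fh:
        passed, line_rate, branch_rate = check_coverage(fh.read())
    print(f"line coverage {line_rate:.0%}, branch coverage {branch_rate:.0%}")
    if not passed:
        print(f"FAIL: line coverage is below {MIN_LINE_RATE:.0%}")
    return 0 if passed else 1


# In a CI step this could be run as: raise SystemExit(main("coverage.xml"))
```

Reporting branch coverage alongside line coverage keeps the second of the infographic's recommended coverage types visible, even though only line coverage is enforced here.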

Here are a few tips for effectively using code coverage:

Realistic Targets: Aim for 70-85% coverage for most projects, prioritizing critical business logic.

Prioritize Critical Paths: Focus on testing critical and complex sections of the codebase first.

Gap Analysis: Use coverage reports to identify gaps in testing and guide the creation of new test cases, rather than treating it as the sole measure of quality.

Combine with Other Metrics: Consider combining code coverage with other metrics like mutation testing for deeper insights into test effectiveness.

Context-Specific Targets: Tailor your coverage targets to the specific criticality and risk profile of your project. A life-critical system may require higher coverage than a less critical application.
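Mutation testing, suggested in the tips above, checks whether tests actually detect faults: it makes small deliberate changes ("mutants") to the code and counts how many of them the test suite catches. Real tools (such as PIT for Java or mutmut for Python) rewrite source code automatically; the Python sketch below fakes this by injecting the comparison operator so it can be swapped. The `is_adult` function and both test suites are hypothetical. Both suites execute every line of `is_adult`, so their code coverage is identical, yet only the suite that checks the boundary value detects every mutant.

```python
import operator
from typing import Callable

Cmp = Callable[[int, int], bool]


def make_is_adult(cmp: Cmp) -> Callable[[int], bool]:
    """Build the (hypothetical) function under test with an injectable operator.

    The original code uses >=; swapping the operator simulates a mutant.
    """
    def is_adult(age: int) -> bool:
        return cmp(age, 18)
    return is_adult


def weak_suite(fn: Callable[[int], bool]) -> bool:
    """Runs every line of is_adult but never tests the boundary age of 18."""
    return fn(30) is True and fn(5) is False


def strong_suite(fn: Callable[[int], bool]) -> bool:
    """The same checks plus the boundary case."""
    return weak_suite(fn) and fn(18) is True


# Each mutant replaces >= with a different comparison
MUTANTS: dict[str, Cmp] = {
    ">= becomes >": operator.gt,
    ">= becomes <": operator.lt,
    ">= becomes <=": operator.le,
    ">= becomes ==": operator.eq,
}


def mutation_score(suite: Callable[[Callable[[int], bool]], bool]) -> float:
    """Fraction of mutants 'killed', i.e. detected by a failing suite."""
    if not suite(make_is_adult(operator.ge)):
        raise ValueError("the suite must pass against the original code")
    killed = sum(1 for mutant in MUTANTS.values() if not suite(make_is_adult(mutant)))
    return killed / len(MUTANTS)


print(f"weak suite:   {mutation_score(weak_suite):.0%}")
print(f"strong suite: {mutation_score(strong_suite):.0%}")
```

The weak suite scores 75% because the `>` mutant behaves identically for ages 30 and 5 and therefore survives; the strong suite's check at age 18 kills it.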

Code coverage, when used strategically and in conjunction with other quality metrics, provides a powerful mechanism for improving the quality, reliability, and maintainability of your software. It’s a valuable tool for any business aiming to deliver high-quality products to the UK market.

Cyclomatic complexity is a crucial software quality metric that provides a quantitative measure of code complexity. It helps developers, project managers, and business owners understand the intricacy of their software, predict potential maintenance costs, and ultimately improve the quality and maintainability of their codebase. In essence, it counts the number of linearly independent paths through a piece of code. That number equals the test cases needed for basis path testing and is an upper bound on the tests needed for full branch coverage; the total number of possible paths, which full path coverage would require, can be far larger. Introduced by Thomas McCabe Sr. in 1976, the metric is language-independent and grounded in graph theory, making it a valuable tool for any software development project.

Cyclomatic complexity works by analysing the control flow graph of a program. In this graph, nodes represent blocks of sequential code and edges represent possible transfers of control between those blocks. The more branches and decision points (such as if statements, for and while loops, case statements, and conditional operators) the code contains, the higher the cyclomatic complexity. Formally, M = E - N + 2P, where E is the number of edges, N the number of nodes, and P the number of connected components (1 for a single function). For a single function this is equivalent to counting the binary decision points and adding one.
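The decision-counting rule can be sketched with Python's standard `ast` module. The counter below approximates McCabe complexity for each function as 1 plus the number of branching constructs (`if`/`elif`, loops, conditional expressions, `except` clauses, and each extra operand of `and`/`or`), and flags anything above 10, a commonly used limit. It is a simplified illustration with hypothetical function names; established tools such as radon or the mccabe plugin for flake8 apply slightly different counting rules.

```python
import ast

# Node types that each add one decision point (an approximation of McCabe's rule)
DECISION_NODES = (ast.If, ast.For, ast.AsyncFor, ast.While, ast.IfExp, ast.ExceptHandler)


def _own_nodes(func: ast.AST):
    """Yield nodes inside func without descending into nested functions or classes."""
    stack = list(ast.iter_child_nodes(func))
    while stack:
        node = stack.pop()
        yield node
        if not isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef, ast.ClassDef)):
            stack.extend(ast.iter_child_nodes(node))


def cyclomatic_complexity(func: ast.AST) -> int:
    score = 1  # a function with no branches has exactly one path
    for node in _own_nodes(func):
        if isinstance(node, DECISION_NODES):
            score += 1
        elif isinstance(node, ast.BoolOp):
            score += len(node.values) - 1  # each extra `and`/`or` operand is a decision
    return score


def complexity_report(source: str) -> dict[str, int]:
    """Map each function name in source to its approximate complexity."""
    tree = ast.parse(source)
    return {
        node.name: cyclomatic_complexity(node)
        for node in ast.walk(tree)
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef))
    }


SAMPLE = '''
def classify(n):
    if n < 0:
        return "negative"
    elif n == 0:
        return "zero"
    for d in (2, 3, 5):
        if n % d == 0 and n != d:
            return "composite"
    return "other"
'''

for name, score in complexity_report(SAMPLE).items():
    flag = "refactor" if score > 10 else "ok"
    print(f"{name}: {score} ({flag})")
```

For `classify`, the two `if` tests, the loop, the inner `if`, and the `and` each add one, giving a score of 6.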

The benefits of tracking and managing cyclomatic complexity are numerous. High complexity scores often indicate code that is difficult to understand, test, and maintain. This can lead to increased development time, higher bug rates, and difficulty in implementing changes or adding new features. By keeping cyclomatic complexity low, development teams can improve code readability, reduce the risk of defects, and simplify future maintenance efforts. Cyclomatic complexity can also be used to estimate testing effort, since it gives the number of test cases needed to exercise every linearly independent path (basis path testing). This helps in resource planning and ensures adequate testing resources are allocated.

Examples of successful implementation of cyclomatic complexity checks are widespread in the industry. NASA, known for its rigorous software quality standards, mandates a cyclomatic complexity score below 10 for its mission-critical flight software. Microsoft incorporates complexity metrics into its Windows development process. Even open-source projects like the Linux kernel consider complexity during patch reviews. Financial institutions in the UK and globally often utilize cyclomatic complexity analysis as part of their regulatory compliance efforts, demonstrating the metric's wide acceptance and importance.

For business owners, understanding and managing cyclomatic complexity can translate directly to cost savings and improved product quality. By incorporating complexity analysis into the development process, you can identify areas of potential risk early on, reduce testing and maintenance costs, and ensure the development of robust, maintainable software. This ultimately leads to faster time to market, improved customer satisfaction, and a stronger competitive edge.

Here are some actionable tips for implementing and utilizing cyclomatic complexity in your software development process:

Keep functions below a complexity score of 10: This is a generally accepted best practice and helps ensure code clarity and maintainability.

Refactor functions with scores above 15: These functions are likely overly complex and should be broken down into smaller, more manageable units.

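Use early returns to reduce nesting: Guard clauses do not lower the cyclomatic score itself, because the number of decisions stays the same, but they flatten the control flow and make the code easier to follow.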

Break complex functions into smaller, more focused functions: This improves modularity and reduces the overall complexity of individual functions.

Consider complexity when designing algorithms: Choose algorithms and data structures that keep branching logic simple where possible, for example a lookup table instead of a long if/else chain.

Integrate complexity analysis into your CI/CD pipeline: Automate the process to ensure regular monitoring and enforcement of complexity thresholds.
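As an illustration of the refactoring tips above, the hypothetical Python functions below return the same shipping rates in two ways. The branching version has a cyclomatic complexity of 4 (three decisions plus one) and gains a point for every region added, whereas the table-driven version stays at 2 however many regions the dictionary holds, because the data lives in a lookup table rather than in control flow. It also rejects invalid input with a guard clause. The region codes and prices are invented for the example.

```python
SHIPPING_RATES = {"UK": 3.99, "EU": 7.99, "US": 12.99}  # hypothetical rates


def shipping_cost_branchy(region: str) -> float:
    # Cyclomatic complexity 4: three decisions + 1, growing with every new region
    if region == "UK":
        return 3.99
    elif region == "EU":
        return 7.99
    elif region == "US":
        return 12.99
    else:
        raise ValueError(f"unknown region: {region}")


def shipping_cost_table(region: str) -> float:
    # Cyclomatic complexity 2: one guard clause + 1, regardless of table size
    rate = SHIPPING_RATES.get(region)
    if rate is None:
        raise ValueError(f"unknown region: {region}")
    return rate
```

Adding a new region to the table-driven version is a data change rather than a new branch, so it needs no new control-flow tests.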

While cyclomatic complexity is a powerful metric, it's important to understand its limitations. It doesn’t account for code readability factors like naming conventions or code style. It can also penalize necessarily complex algorithms, especially in domains like cryptography or scientific computing. Furthermore, it doesn't measure data complexity or reflect the actual cognitive complexity a developer might experience. Therefore, while cyclomatic complexity should be a key consideration, it should be used in conjunction with other quality metrics and best practices to provide a comprehensive assessment of software quality. For example, code reviews remain essential for evaluating aspects like readability and design, which are not captured by complexity metrics alone.

Defect density is a crucial software quality metric that provides valuable insights into the health and maintainability of your software projects. It measures the concentration of defects relative to the size of the software, offering a quantifiable way to assess and compare quality across different modules, releases, or even entire projects. Understanding and effectively using defect density can significantly contribute to improved software quality, reduced development costs, and increased customer satisfaction. This metric deserves its place on the list of essential software quality metrics due to its ability to provide objective comparisons, identify problematic areas, and support data-driven decision-making.

Defect density is typically expressed as the number of defects per thousand lines of code (KLOC) or defects per function point. While KLOC is a more common measure, function points offer a more abstract and arguably more accurate representation of software size, as they are less susceptible to variations in coding style. The calculation itself is straightforward: simply divide the total number of confirmed defects found during a specific period (e.g., testing phase, post-release) by the size of the software in KLOC or function points.
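The calculation is simple enough to sketch in a few lines of Python. The module names, defect counts, and line counts below are hypothetical; in practice they would come from your defect tracker and a line-counting tool. The sketch computes defects per KLOC for each module and for the whole system, listing the densest modules first so hotspots stand out.

```python
from dataclasses import dataclass


@dataclass
class Module:
    name: str
    defects: int        # confirmed defects found in the measurement period
    lines_of_code: int  # size of the module in physical lines


def defect_density(defects: int, lines_of_code: int) -> float:
    """Defects per thousand lines of code (KLOC)."""
    return defects / (lines_of_code / 1000)


modules = [  # hypothetical data
    Module("billing", defects=18, lines_of_code=12_000),
    Module("auth", defects=3, lines_of_code=6_000),
    Module("reporting", defects=6, lines_of_code=12_000),
]

for m in sorted(modules, key=lambda m: defect_density(m.defects, m.lines_of_code), reverse=True):
    print(f"{m.name:<10} {defect_density(m.defects, m.lines_of_code):.2f} defects/KLOC")

total = defect_density(sum(m.defects for m in modules), sum(m.lines_of_code for m in modules))
print(f"{'overall':<10} {total:.2f} defects/KLOC")
```

Here `billing` stands out at 1.5 defects/KLOC against an overall figure of 0.9, which is the kind of signal that justifies focusing testing and review effort on one module.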

How and Why to Use Defect Density:

Defect density serves multiple purposes throughout the software development lifecycle. During development, it helps identify modules or components with a higher concentration of defects, allowing development teams to focus their testing and debugging efforts. This targeted approach optimizes resource allocation and ensures that problematic areas receive the necessary attention. Tracking defect density across different releases helps monitor quality trends over time, enabling proactive identification of potential issues and informing process improvement initiatives. Furthermore, defect density can be a crucial input for release readiness decisions, providing objective data to assess the risk associated with deploying a new software version.

Features and Benefits:

Normalization: Defect counts are normalized by code size, enabling meaningful comparisons between projects and modules of different sizes.

Cross-Project Comparison: Facilitates benchmarking and comparison of software quality across different teams, projects, and even organisations.

Trend Analysis: Tracks quality trends over time, revealing improvements or regressions in the development process.

Release Readiness: Supports data-driven decisions regarding the suitability of a software release.

Integration with Defect Tracking: Defect counts can be pulled directly from defect tracking systems, so data collection and reporting can be automated.

Industry Benchmarking: Enables comparison against industry averages and best practices.

Examples of Successful Implementation:

Several industry leaders demonstrate the power of defect density as a key quality indicator. IBM reports an industry average of 15-50 defects per KLOC, highlighting the wide range of quality levels across different software development practices. Microsoft, renowned for its rigorous quality control, reportedly achieves less than 0.5 defects per KLOC in its shipping code for Windows. Furthermore, highly disciplined methodologies like Cleanroom software development aim for less than 1 defect per KLOC, while critical systems like NASA space software maintain an exceptionally low defect density of less than 0.1 defects per KLOC. These examples showcase the potential for significant quality improvements through focused effort and robust development processes.

Pros and Cons of Using Defect Density:

While defect density offers valuable insights, it's essential to be aware of its limitations.

Pros:

Objective Comparison: Enables objective comparison of software quality.

Problem Identification: Helps pinpoint problematic code areas requiring attention.

Predictive Modelling: Supports predictive quality modelling and resource allocation.

Industry Benchmarks: Provides a basis for comparison against industry best practices.

Cons:

Accurate Classification: Requires accurate and consistent defect classification.

Code Size Limitations: Lines of code can be a misleading measure of software size, particularly for complex systems.

Complexity: May not adequately account for differences in code complexity.

Gaming the System: Can potentially encourage writing more code to artificially improve ratios.

Severity Weighting: Different defect severity levels need appropriate weighting for a more nuanced analysis.

Actionable Tips for Business Owners:

Track by Severity and Origin: Track defects by severity and origin to identify specific areas for improvement.

Consistent Classification: Establish and enforce consistent defect classification criteria across your development teams.

Consider Function Points: Consider using function points for size normalization, since they are less affected by programming language and coding style than lines of code.

Monitor Trends: Focus on monitoring trends in defect density rather than absolute values.

Correlate with Customer Feedback: Correlate defect density with customer-reported issues to validate its effectiveness.

By understanding the nuances of defect density and implementing these practical tips, businesses in the UK can leverage this powerful metric to improve software quality, reduce development costs, and deliver exceptional customer experiences. While no single metric perfectly captures all aspects of software quality, defect density remains a valuable tool in the pursuit of robust and reliable software.

Mean Time Between Failures (MTBF) is a crucial software quality metric that quantifies the average time elapsed between system failures during normal operation. It provides a valuable measure of a system's reliability and stability, enabling businesses to predict potential downtime, proactively plan maintenance, and set realistic customer expectations. In the competitive UK business landscape, where digital services are often mission-critical, understanding and optimising MTBF is essential for maintaining a positive user experience and ensuring business continuity. This metric deserves its place on any list of essential software quality metrics because it offers a direct link between system performance and business impact.

MTBF primarily focuses on the operational reliability of a system. By tracking failure patterns over time, it helps identify weaknesses and predict potential future failures. This information supports predictive maintenance planning, allowing businesses to schedule downtime proactively and minimise disruption to users. MTBF can also underpin Service Level Agreements (SLAs), providing a quantifiable measure of performance for both internal teams and external customers. Because it can be calculated directly from incident management records, it also provides useful context for post-incident analysis and continuous improvement efforts. Finally, MTBF provides a clear, customer-facing quality metric that demonstrates a commitment to reliability and service stability.

The calculation of MTBF involves dividing the total operational time by the number of failures observed during that period. For example, if a system runs for 1,000 hours and experiences two failures, the MTBF would be 500 hours. It’s important to note that MTBF specifically measures the time between failures, not the time to repair a failure. That’s a separate metric known as Mean Time To Repair (MTTR).
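A small Python sketch shows how MTBF, MTTR, and the availability figure that combines them relate. It assumes you already know the total operating time (with planned maintenance excluded) and the repair duration of each unplanned failure. The numbers reproduce the 1,000-hour, two-failure example above, with repair times of 1.5 and 0.5 hours assumed for illustration. Availability uses the common steady-state formula MTBF / (MTBF + MTTR).

```python
def reliability_summary(operating_hours: float, repair_hours: list[float]) -> dict[str, float]:
    """MTBF, MTTR and steady-state availability from failure records.

    operating_hours: time the system was running (planned maintenance excluded)
    repair_hours: time taken to restore service after each unplanned failure
    """
    failures = len(repair_hours)
    if failures == 0:
        raise ValueError("MTBF cannot be calculated until at least one failure is observed")
    mtbf = operating_hours / failures
    mttr = sum(repair_hours) / failures
    availability = mtbf / (mtbf + mttr)
    return {"mtbf": mtbf, "mttr": mttr, "availability": availability}


summary = reliability_summary(1_000, [1.5, 0.5])
print(f"MTBF {summary['mtbf']:.0f} h, MTTR {summary['mttr']:.1f} h, "
      f"availability {summary['availability']:.4%}")
```

Keeping MTTR alongside MTBF makes it clear whether an availability problem comes from failing too often or from recovering too slowly.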

Published availability figures, which depend on both how rarely a system fails (MTBF) and how quickly it recovers (MTTR), show the levels of reliability different sectors aim for. Amazon, for example, boasts an impressive 99.99% uptime, translating to approximately 52 minutes of downtime per year. This level of reliability is critical for maintaining their vast e-commerce operations and meeting customer expectations. Similarly, Google Cloud offers a 99.95% availability SLA, demonstrating their commitment to providing a stable platform for businesses. Within the UK, banking systems often target 99.9% uptime (8.76 hours downtime per year), recognising the critical importance of continuous service availability for financial transactions. Even higher availability targets are common in the telecoms sector, where networks aim for "five nines" (99.999%) availability, equivalent to just over 5 minutes of downtime per year.
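The downtime figures quoted above follow directly from the availability percentage. This short Python check converts an availability target into an annual downtime budget, assuming a 365-day year (8,760 hours) and ignoring leap years.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours; leap years ignored


def annual_downtime_minutes(availability_percent: float) -> float:
    """Maximum downtime per year, in minutes, allowed by an availability target."""
    unavailable_fraction = 1 - availability_percent / 100
    return unavailable_fraction * HOURS_PER_YEAR * 60


for target in (99.9, 99.95, 99.99, 99.999):
    minutes = annual_downtime_minutes(target)
    print(f"{target}% -> {minutes:.1f} min/year ({minutes / 60:.2f} h)")
```

Each extra "nine" cuts the yearly downtime budget by a factor of ten, from roughly 8.76 hours at 99.9% to about 5.3 minutes at 99.999%.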

To effectively utilize MTBF, consider these actionable tips:

Define "failure" clearly and consistently: Establish precise criteria for what constitutes a system failure. This ensures accurate data collection and consistent reporting.

Exclude planned maintenance from calculations: MTBF focuses on unplanned downtime. Exclude scheduled maintenance periods to avoid skewing the results.

Track different failure categories separately: Categorising failures (e.g., hardware, software, network) provides more granular insights and allows for targeted improvements.

Use statistical confidence intervals: MTBF is a statistical measure, and confidence intervals help understand the margin of error associated with the calculated value.

Correlate with customer impact metrics: Linking MTBF with metrics like customer satisfaction or lost revenue helps understand the real-world impact of system failures.
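For the confidence-interval tip, one option is a bootstrap percentile interval, sketched below in Python using only the standard library. This is a swapped-in technique: reliability engineers often use a chi-squared interval instead, which assumes exponentially distributed failure times. The inter-failure gaps are invented, and the function name, resample count, and seed are illustrative choices. With only a handful of failures the interval will be wide, which is exactly why it is worth reporting.

```python
import random
import statistics


def bootstrap_mtbf_interval(gaps_hours: list[float], confidence: float = 0.95,
                            resamples: int = 5_000, seed: int = 42) -> tuple[float, float, float]:
    """Return (mtbf, lower, upper) using a bootstrap percentile interval.

    gaps_hours: operating hours between consecutive unplanned failures
    """
    rng = random.Random(seed)  # fixed seed so results are reproducible
    n = len(gaps_hours)
    # Resample the gaps with replacement many times and record each sample mean
    means = sorted(
        statistics.fmean(rng.choices(gaps_hours, k=n)) for _ in range(resamples)
    )
    alpha = (1 - confidence) / 2
    lower = means[int(alpha * resamples)]
    upper = means[int((1 - alpha) * resamples) - 1]
    return statistics.fmean(gaps_hours), lower, upper


gaps = [310.0, 545.0, 120.0, 890.0, 405.0, 260.0, 700.0, 380.0]  # hypothetical data
mtbf, low, high = bootstrap_mtbf_interval(gaps)
print(f"MTBF about {mtbf:.0f} h (95% interval roughly {low:.0f}-{high:.0f} h)")
```

Reporting the interval alongside the point estimate makes it obvious when an apparent change in MTBF is within the noise of a small sample.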

While MTBF provides valuable insights, it’s important to acknowledge its limitations. It requires long observation periods to achieve accurate results. Furthermore, MTBF may not fully capture the severity of different failures – a short outage affecting critical functionality could have a greater impact than a longer outage affecting a less critical component. External factors, such as power outages or network disruptions, can also influence MTBF. Finally, MTBF doesn't inherently distinguish between different failure types or directly reflect the real user impact. Therefore, it’s crucial to use MTBF in conjunction with other software quality metrics to gain a comprehensive understanding of system performance.

MTBF has a rich history, originating in military reliability engineering standards. Its use expanded through the telecommunications industry, particularly with the Bell System, and became further embedded in aerospace industry standards. Today, MTBF remains a key component of best practice frameworks like the IT Infrastructure Library (ITIL), highlighting its ongoing relevance for modern IT operations. For UK businesses seeking to improve their software quality and deliver reliable services, MTBF provides a valuable tool for measuring, managing, and optimising system reliability.

Technical debt is a crucial software quality metric that quantifies the implied cost of rework caused by choosing expedient, "quick-and-dirty" solutions during software development instead of more robust, albeit time-consuming, approaches. It essentially represents the cost of cutting corners. Originally coined by Ward Cunningham, the metaphor compares accumulating code quality issues to accruing financial debt. Just as financial debt accrues interest, technical debt accumulates "interest" in the form of increased development time and costs down the line. Modern tools estimate technical debt in terms of remediation time or cost, providing a tangible measure for this often-hidden cost. This allows businesses to understand the financial implications of technical decisions and make more informed choices.

Technical debt arises from various factors, including tight deadlines, lack of clear requirements, insufficient testing, and simply prioritizing speed over quality. While taking on some technical debt might be strategically acceptable in certain situations, such as meeting a critical market launch date, uncontrolled accumulation can cripple a project over time, leading to increased bug counts, reduced development velocity, and ultimately, higher costs. This is why tracking and managing technical debt is essential for long-term software project success. This metric allows teams to understand the state of their codebase and make informed decisions about refactoring and future development.

Technical debt measurement tools offer a range of features, including quantifying code quality issues by assigning severity levels, estimating the effort required for remediation, tracking debt accumulation over time to identify trends, categorizing debt by type (e.g., code complexity, code duplication, lack of documentation), and integrating with static analysis tools for automated assessment. Many also support prioritization decisions by highlighting the most impactful areas to address. This allows development teams in the UK to focus their efforts on the most critical issues.

Technical debt's inclusion in software quality metrics is crucial for several reasons. First, it makes quality issues visible to management, translating technical jargon into the language of business—time and money. This enables informed discussions about resource allocation and prioritization. Second, it supports data-driven refactoring prioritization, ensuring that the most impactful code areas are addressed first. Third, it facilitates planning for quality improvements by budgeting time and resources specifically for debt reduction. Finally, it provides a concrete business case for addressing quality issues, justifying the investment required for refactoring and code improvements.

Several successful companies demonstrate the value of managing technical debt. SonarQube reports indicate that typical enterprise applications have accumulated over 30 days of technical debt. Companies like Stripe regularly allocate a significant portion (e.g., 20%) of their development time to debt reduction, recognizing the long-term benefits of maintaining a healthy codebase. Similarly, Shopify measures and reports technical debt in their quarterly reviews, demonstrating their commitment to code quality. LinkedIn tracks debt ratios across all engineering teams, fostering a culture of quality and continuous improvement.

To effectively manage technical debt, consider the following tips:

Set Target Debt Ratios: Set target debt ratios (typically less than 5%) to keep debt under control.

Allocate Regular Time: Allocate regular time for debt reduction, just as Stripe does, to prevent it from becoming overwhelming.

Focus on High-Impact Areas: Focus on high-impact, high-frequency code areas, maximising the return on investment for refactoring efforts.

Automate Measurement: Use automated tools for consistent measurement and tracking.

Communicate in Business Terms: Finally, and crucially, communicate debt in business terms (time and cost) to ensure buy-in from stakeholders.

Learn more about Technical Debt and explore strategies for managing it effectively.
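The debt ratio behind the "less than 5%" target is usually defined as estimated remediation cost divided by the estimated cost of developing the code from scratch. The Python sketch below follows the approach documented for SonarQube, where development cost is lines of code multiplied by a cost per line. The 30-minutes-per-line default and the 5/10/20/50% rating thresholds are assumptions to check against your own tool's configuration, and the project figures are hypothetical.

```python
def debt_ratio(remediation_minutes: float, lines_of_code: int,
               minutes_per_line: float = 30.0) -> float:
    """Technical debt ratio = remediation cost / development cost."""
    development_minutes = lines_of_code * minutes_per_line
    return remediation_minutes / development_minutes


def rating(ratio: float) -> str:
    """Letter grade using assumed A-E thresholds of 5%, 10%, 20% and 50%."""
    for letter, limit in (("A", 0.05), ("B", 0.10), ("C", 0.20), ("D", 0.50)):
        if ratio <= limit:
            return letter
    return "E"


# Hypothetical project: 50,000 lines and an estimated 40 eight-hour days of remediation
remediation = 40 * 8 * 60  # minutes
ratio = debt_ratio(remediation, 50_000)
print(f"debt ratio {ratio:.1%}, rating {rating(ratio)}")
```

Expressing debt as a ratio of development cost lets projects of different sizes be compared, and the remediation estimate in days translates directly into the time-and-cost language the tips recommend for stakeholders.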

While technical debt provides valuable insights, it’s crucial to acknowledge its limitations. Estimation accuracy can vary significantly depending on the tools and methodologies used. Some types of debt, such as architectural debt or design debt, might be difficult to capture quantitatively. Furthermore, assessment of certain aspects of technical debt can be subjective. Implementing and maintaining tools for measuring technical debt requires an investment in both resources and training. Finally, an overemphasis on technical debt metrics can overwhelm teams with too much information, obscuring more critical development goals. Therefore, a balanced approach that considers the context and specific needs of the project is essential.

Customer Satisfaction Score (CSAT) is a crucial software quality metric that measures user satisfaction with the software's quality, functionality, and overall experience. While other software quality metrics might focus on internal technical aspects, CSAT provides invaluable external validation by directly capturing the user's perspective. It bridges the gap between technical excellence and perceived value, answering the critical question: How happy are our users with our software? This makes CSAT an essential metric for any business owner in the UK looking to deliver high-quality software products.

CSAT typically involves asking users a direct question like, “How satisfied are you with [Software/Specific Feature]?” and collecting answers on a scale, often ranging from 1 (Very Dissatisfied) to 5 (Very Satisfied). The score is most commonly reported as the percentage of respondents who choose 4 or 5, although some teams report the average rating instead. Either way, the approach is easy to understand and to communicate across teams and stakeholders.
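CSAT is commonly reported as the percentage of respondents who choose 4 or 5 on a 1-5 scale, and sometimes as the mean rating. The Python sketch below computes both from a list of responses; the survey data is hypothetical, and the satisfaction threshold of 4 is a parameter you can change.

```python
from statistics import fmean


def csat_percent(ratings: list[int], satisfied_threshold: int = 4) -> float:
    """Percentage of responses at or above the threshold (4 or 5 on a 1-5 scale)."""
    if not ratings:
        raise ValueError("no survey responses")
    if any(r < 1 or r > 5 for r in ratings):
        raise ValueError("ratings must be on a 1-5 scale")
    satisfied = sum(1 for r in ratings if r >= satisfied_threshold)
    return 100 * satisfied / len(ratings)


responses = [5, 4, 3, 5, 2, 4, 5, 1, 4, 5]  # hypothetical survey results
print(f"CSAT: {csat_percent(responses):.0f}% satisfied, mean rating {fmean(responses):.1f}/5")
```

The two numbers can diverge: a handful of very low ratings drags the mean down without changing the share of satisfied users, so state which definition a reported CSAT uses.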

Beyond simple surveys, CSAT can be gathered through multiple channels, offering a holistic view of user sentiment. These channels include app store ratings (particularly relevant in today’s mobile-first world), feedback forms embedded within the software, support ticket sentiment analysis, and even social media monitoring. This multi-channel approach allows businesses to capture a broader range of user experiences.

The benefits of tracking CSAT are numerous. It reflects the real user experience, highlighting areas where the software excels and where it falls short. This user-centric approach drives business-relevant quality improvements by focusing on aspects that directly impact user satisfaction. A high CSAT score often correlates with increased user retention, positive word-of-mouth referrals, and ultimately, stronger business performance. Furthermore, CSAT supports product decision-making by providing data-backed insights into user preferences and needs. It also validates technical quality investments by demonstrating the tangible impact of those investments on user satisfaction.

Several successful companies highlight the power of prioritising CSAT. Apple, known for its focus on user experience, consistently maintains a high average App Store rating (4.5+ stars) through rigorous quality assurance and a commitment to user feedback. Similarly, Slack has achieved high customer satisfaction scores (e.g., 93%) by investing in reliability and performance, ensuring a seamless communication experience for its users. Zoom’s journey from a low 2.0-star rating to a respectable 4.4-star rating exemplifies how addressing performance and usability issues based on user feedback can drastically improve CSAT. GitHub, a platform used by millions of developers, correlates CSAT with system availability metrics, demonstrating the direct link between technical performance and user satisfaction.

Despite its advantages, CSAT has certain limitations. External factors unrelated to software quality, such as marketing campaigns or competitor actions, can influence user sentiment. Response bias, inherent in any feedback collection method, can skew the results. Furthermore, feedback can be delayed, making it challenging to identify the impact of recent quality changes immediately. CSAT also may not pinpoint specific technical issues; it provides an overview of user sentiment but might not offer granular diagnostics. Finally, CSAT scores can exhibit seasonal and contextual variations, requiring careful interpretation.

To effectively utilise CSAT, consider these actionable tips:

Correlate CSAT with technical metrics: Connecting CSAT with metrics like system uptime, error rates, and load times can reveal valuable insights into the technical drivers of user satisfaction.

Use multiple feedback channels: Diversifying feedback sources ensures a comprehensive understanding of user sentiment.

Track satisfaction trends over releases: Monitoring CSAT trends helps assess the impact of software updates and identify areas for continuous improvement.

Segment feedback by user characteristics: Analyzing feedback by user demographics, usage patterns, or subscription tiers can uncover specific needs and preferences.

Act quickly on quality-related complaints: Promptly addressing negative feedback demonstrates a commitment to user satisfaction and can mitigate potential churn.

The rise of CSAT as a key metric is closely linked to the Net Promoter Score methodology, the growing emphasis on Customer Experience Management, app store rating systems, and SaaS customer success practices. These trends underscore the increasing importance of user-centric approaches to software development.

Learn more about Customer Satisfaction Score (CSAT)

By incorporating CSAT into your software quality management process, you gain a powerful tool for understanding user needs, driving targeted improvements, and ultimately, delivering software that delights users and contributes to business success in the competitive UK market. Focusing on CSAT ensures you are building software that not only meets technical specifications but also resonates with the people who use it, creating a positive user experience that translates into tangible business value.

Code churn rate, a crucial software quality metric, measures the percentage of a codebase that is modified, added, or deleted within a specific timeframe. This metric provides valuable insights into the stability and maintainability of your software, helping you identify potential problem areas and allocate resources effectively. Tracking code churn is essential for any business owner investing in software development, as it directly impacts the long-term cost and quality of the product. Its inclusion in this list of essential software quality metrics stems from its ability to predict defect-prone areas, inform architectural decisions, and ultimately improve the development process.

Code churn is calculated by analysing the changes made to the codebase within a given period, typically measured in lines of code modified, added, or deleted. This data is readily available through version control systems like Git, making the metric relatively easy to track and automate. The churn rate itself can be represented as a percentage of the total codebase or as an absolute number of changes. For example, if 1,000 lines of code are changed in a 10,000-line codebase, the churn rate is 10%. This percentage can be tracked at various granularities, from individual files and modules to the entire system, offering a comprehensive view of development activity.
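Because the raw data comes from version control, churn is easy to script. The Python sketch below parses text in the format produced by `git log --numstat --format=` (tab-separated added and deleted line counts per file, with `-` for binary files) and reports churn per file and as a percentage of codebase size. The sample log, file names, and the 10,000-line codebase size are hypothetical, and counting added plus deleted lines is only one of several reasonable churn definitions.

```python
from collections import Counter

# Hypothetical output of: git log --since="1 month ago" --numstat --format=
NUMSTAT = """\
120\t30\tsrc/billing/invoice.py
5\t2\tsrc/auth/login.py

80\t95\tsrc/billing/invoice.py
-\t-\tassets/logo.png
40\t10\tsrc/reports/export.py
"""


def churn_by_file(numstat: str) -> Counter:
    """Sum added + deleted lines per file, skipping blank and binary entries."""
    churn = Counter()
    for line in numstat.splitlines():
        parts = line.split("\t")
        if len(parts) != 3 or parts[0] == "-":
            continue  # blank separator line or binary file
        added, deleted, path = parts
        churn[path] += int(added) + int(deleted)
    return churn


CODEBASE_LINES = 10_000  # hypothetical total size of the codebase
churn = churn_by_file(NUMSTAT)
total = sum(churn.values())
print(f"churn: {total} lines ({100 * total / CODEBASE_LINES:.1f}% of codebase)")
for path, lines in churn.most_common(3):
    print(f"{lines:>5}  {path}")
```

Sorting by per-file churn immediately surfaces candidates such as `invoice.py` here, which was rewritten heavily across two commits and would be a natural target for closer review.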

Understanding how code churn works in practice is key to leveraging its benefits. Imagine a scenario where a particular module consistently exhibits high churn. This could indicate several underlying issues: unstable requirements leading to frequent rework, a poorly designed module requiring constant patching, or a section of the codebase that's particularly complex and error-prone. By identifying these high-churn areas, development teams can prioritize code reviews, allocate additional testing resources, and consider refactoring to improve long-term stability and reduce future development costs.

Several successful implementations of code churn analysis demonstrate its value. Research at Microsoft led by Nachiappan Nagappan found a strong correlation between high churn rates and defect density. Files experiencing more than 10 changes per month were found to have twice the defect rate of less frequently modified files. This insight allows businesses to proactively address quality issues by focusing on high-churn areas. Similarly, Google utilises churn metrics to identify potential candidates for refactoring, streamlining their codebase and improving maintainability. Facebook, too, has found correlations between code churn and the thoroughness of code reviews, leading to adjustments in their review processes. Netflix leverages churn rate monitoring across their microservices architecture to ensure stability and identify potential performance bottlenecks.

Here are some actionable tips for incorporating code churn analysis into your software development process:

Monitor trends, not just absolute values: A sudden spike in churn rate is more concerning than a consistently high but stable churn. Track churn over time to identify unusual patterns and potential issues.

Investigate high-churn files: Don't just observe the metric; delve into the reasons behind high churn. Is it due to new feature development, bug fixes, or refactoring? Understanding the context is crucial.

Correlate churn with defect rates: Track both metrics in parallel to identify areas where high churn translates into increased bug counts. This will pinpoint areas requiring immediate attention.

Consider the context: High churn may be perfectly acceptable during periods of intense feature development. Differentiate between churn related to new features and churn caused by fixing bugs or instability.

Guide testing resource allocation: Direct more testing resources towards modules experiencing high churn, as they are statistically more likely to contain defects.
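The first tip, watching trends rather than absolute values, can be sketched as a simple rolling-baseline check. This is one illustrative approach, not an established standard: it assumes churn has already been totalled per period (for example per week), and the function name `churn_spikes`, the window size, and the threshold are hypothetical defaults you would tune for your own codebase.

```python
from statistics import mean, stdev


def churn_spikes(
    period_churn: list[int], window: int = 4, threshold: float = 2.0
) -> list[int]:
    """Return indices of periods whose churn exceeds the trailing mean
    by more than `threshold` standard deviations of the trailing window."""
    if window < 2:
        raise ValueError("window must be at least 2 to compute a deviation")
    spikes = []
    for i in range(window, len(period_churn)):
        history = period_churn[i - window:i]  # the preceding `window` periods
        baseline = mean(history)
        # Floor the spread at 1 line so a perfectly flat history does not
        # flag every tiny increase as a spike.
        spread = max(stdev(history), 1.0)
        if period_churn[i] > baseline + threshold * spread:
            spikes.append(i)
    return spikes


# Hypothetical weekly churn for one module: week 4 jumps sharply.
print(churn_spikes([100, 110, 90, 100, 400, 105]))  # [4]
# Consistently high but stable churn is not flagged.
print(churn_spikes([500, 510, 490, 500, 505]))  # []
```

Flagged periods are a prompt to investigate, not a verdict: as the limitations below note, a spike caused by a planned refactoring or a new feature may be entirely expected.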

While code churn is a powerful metric, it's important to be aware of its limitations:

High churn isn't always bad: New features or significant refactoring efforts naturally lead to higher churn. Don't automatically assume high churn indicates a problem.

Doesn't distinguish between good and bad changes: Churn simply measures the volume of changes, not their quality. A large refactoring that improves code quality will still register as high churn.

Can discourage necessary refactoring: Developers might be hesitant to refactor code if they fear increasing churn metrics, even if it's beneficial in the long run.

Can penalize actively developed modules: Modules undergoing constant development and improvement will naturally have higher churn than more stable modules.

By understanding the nuances of code churn rate, its benefits, and its limitations, business owners in the UK can leverage this powerful metric to improve software quality, predict potential problem areas, and make informed decisions about resource allocation. This proactive approach to software development ultimately leads to more stable, maintainable, and cost-effective software products.

Performance metrics are crucial software quality metrics that directly impact user satisfaction, business revenue, and overall system stability. They provide quantifiable insights into how efficiently and effectively a software application performs under various conditions. These metrics encompass response time, throughput, and resource utilisation, providing a holistic view of system performance. This makes them essential for any business owner invested in delivering high-quality software solutions.

Understanding the Core Components:

Response Time: This measures the duration a system takes to respond to a user request. A lower response time translates to a snappier and more satisfying user experience. It’s typically measured in milliseconds (ms) and can vary based on the complexity of the request and the current system load. For web applications, a common target is to keep response times under 200ms for most interactions.

Throughput: This metric quantifies the number of transactions or operations a system can process within a specific timeframe. Higher throughput indicates greater processing capacity and efficiency. It's typically measured in transactions per second (TPS) or requests per second (RPS). A high throughput is crucial for applications handling large volumes of data or user requests, such as e-commerce platforms or online gaming servers.

Resource Utilisation: This examines how effectively a system utilises its underlying resources, including CPU, memory, disk I/O, and network bandwidth. Monitoring resource utilisation helps identify bottlenecks and optimise resource allocation. High resource utilisation, especially sustained over time, can lead to performance degradation and system instability.
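To make response time and throughput concrete, here is a minimal Python sketch that derives both from a list of request records. The `Request` record and `summarise` function are hypothetical; the sketch assumes each record stores an arrival time in seconds and a response time in milliseconds, and it measures throughput over the span from the first arrival to the last completion.

```python
from dataclasses import dataclass


@dataclass
class Request:
    start_s: float      # arrival time of the request, in seconds
    duration_ms: float  # response time for this request, in milliseconds


def summarise(requests: list[Request]) -> tuple[float, float]:
    """Return (mean response time in ms, throughput in requests per second)."""
    if not requests:
        raise ValueError("need at least one request")
    mean_ms = sum(r.duration_ms for r in requests) / len(requests)
    # Observation window: first arrival to the last request's completion.
    first_start = min(r.start_s for r in requests)
    last_end = max(r.start_s + r.duration_ms / 1000 for r in requests)
    window_s = last_end - first_start
    if window_s <= 0:
        raise ValueError("observation window must be longer than zero")
    return mean_ms, len(requests) / window_s


# Three hypothetical requests completing within a 2-second window.
log = [Request(0.0, 250.0), Request(0.5, 500.0), Request(1.5, 500.0)]
mean_ms, rps = summarise(log)
print(f"mean response time: {mean_ms:.1f} ms, throughput: {rps:.1f} RPS")
```

The mean is used here only to keep the example short; for latency, percentiles such as P95 are usually more informative because they expose slow outliers that an average smooths over.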

Why Performance Metrics Matter for Software Quality:

Poor performance often masks underlying issues within the software's codebase, architecture, or infrastructure. Slow response times, low throughput, and excessive resource consumption can negatively impact user experience, leading to frustration and potentially lost business. By diligently tracking and analysing performance metrics, businesses can proactively identify and address these problems, ensuring a smooth and efficient user experience.

Features of Robust Performance Monitoring:

Effective performance monitoring relies on a combination of features, including multi-dimensional performance measurement, real-time monitoring capabilities, load testing integration, percentile-based analysis (e.g., P95, P99), resource bottleneck identification, and performance regression detection. These features allow for a comprehensive understanding of system performance and enable targeted optimisation efforts.
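Percentile-based analysis is straightforward to compute from raw latency samples. The sketch below uses the nearest-rank definition, which is only one of several; many monitoring tools, and Python's own `statistics.quantiles`, use interpolating methods that can return slightly different values. The function name and sample data are illustrative.

```python
import math


def percentile(samples_ms: list[float], p: float) -> float:
    """Nearest-rank percentile: the smallest sample such that at least
    p% of all samples are less than or equal to it."""
    if not samples_ms:
        raise ValueError("no samples")
    if not 0 < p <= 100:
        raise ValueError("p must be in (0, 100]")
    ordered = sorted(samples_ms)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


# Illustrative data: 90 fast requests, 8 slower ones, 2 very slow ones.
latencies_ms = [50.0] * 90 + [300.0] * 8 + [1500.0] * 2
mean_ms = sum(latencies_ms) / len(latencies_ms)  # 99.0
p50 = percentile(latencies_ms, 50)  # 50.0
p95 = percentile(latencies_ms, 95)  # 300.0
p99 = percentile(latencies_ms, 99)  # 1500.0
print(mean_ms, p50, p95, p99)
```

Here the mean of 99ms looks healthy, yet one request in a hundred takes 1,500ms: the tail percentiles reveal what the average conceals.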

Pros of Utilising Performance Metrics:

Direct Impact on User Experience: Improved performance directly translates to a better user experience, leading to increased user satisfaction and retention.

Enables Capacity Planning: Performance metrics inform capacity planning decisions, ensuring the system can handle future growth and demand.

Identifies Optimisation Opportunities: By pinpointing performance bottlenecks, these metrics guide optimisation efforts, leading to more efficient resource utilisation.

Supports SLA Monitoring: Performance metrics are essential for monitoring service level agreements (SLAs) and ensuring service quality.

Facilitates Performance Tuning: These metrics provide the data needed to fine-tune system parameters and achieve optimal performance.

Cons of Relying Solely on Performance Metrics:

Influence of External Factors: External factors like network latency or third-party service performance can impact measurements.

Sophisticated Monitoring Infrastructure: Implementing comprehensive performance monitoring often requires investment in monitoring tools and infrastructure.

Real User Conditions: Simulated tests may not fully replicate real-world user behaviour and network conditions.

Performance vs. Functionality Trade-offs: Optimising for performance sometimes necessitates trade-offs with functionality.

Environment-Dependent Measurements: Performance can vary across different environments (development, testing, production).

Examples of Successful Implementation:

Industry giants like Google, Amazon, Netflix, and Facebook prioritise performance metrics. Google aims for sub-100ms search response times, while Amazon understands that even a 100ms latency increase can impact sales. Netflix focuses on maintaining low 99th percentile response times, and Facebook handles millions of requests per second with minimal latency. These examples highlight the importance of performance in achieving business success.

Actionable Tips for Business Owners:

Monitor Percentiles, Not Just Averages: Averages hide slow outliers, so track higher percentiles (e.g., P95, P99) to understand the experience of your slowest users, alongside the median for typical users.

Test Under Realistic Load Conditions: Simulate real-world user behaviour and load patterns during testing.

Set Performance Budgets for New Features: Establish performance targets for new features to prevent performance regressions.

Use Synthetic Monitoring for Early Detection: Implement synthetic monitoring to proactively identify performance issues before they impact users.

Correlate Performance with Business Metrics: Link performance metrics to business outcomes to demonstrate the impact of performance on revenue and user engagement.
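Performance budgets are easiest to enforce as an automated check, for example in a CI pipeline. This minimal sketch assumes P95 latencies per endpoint have already been measured in a load test; the endpoint names, budget values, and the `budget_violations` function are hypothetical.

```python
def budget_violations(
    p95_ms: dict[str, float], budgets_ms: dict[str, float]
) -> dict[str, float]:
    """Return endpoints whose measured P95 exceeds their budget, mapped to
    the overshoot in milliseconds. Endpoints without a budget are ignored."""
    return {
        endpoint: measured - budgets_ms[endpoint]
        for endpoint, measured in p95_ms.items()
        if endpoint in budgets_ms and measured > budgets_ms[endpoint]
    }


# Hypothetical budgets and measurements from a load-test run.
budgets = {"/search": 200.0, "/checkout": 300.0}
measured = {"/search": 180.0, "/checkout": 340.0, "/health": 5.0}
violations = budget_violations(measured, budgets)
print(violations)  # {'/checkout': 40.0}
```

A CI step could fail the build whenever the returned dictionary is non-empty, which turns each budget into a regression gate for new features.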


Performance metrics are indispensable software quality metrics for any business operating in the UK or globally. By understanding and effectively utilising these metrics, businesses can ensure optimal software performance, leading to increased user satisfaction, improved business outcomes, and a competitive edge in the market. These metrics deserve a prominent place in any software quality evaluation process, ensuring the delivered software meets user expectations and business goals.

| Metric | Implementation Complexity 🔄 | Resource Requirements ⚡ | Expected Outcomes 📊 | Ideal Use Cases 💡 | Key Advantages ⭐ |
|---|---|---|---|---|---|
| Code Coverage | Moderate – requires test integration and CI/CD setup | Medium – needs test infrastructure and reporting tools | Quantitative test completeness, identifies untested code areas | Automated testing quality assurance, regulatory compliance | Clear coverage insight, supports regression prevention |
| Cyclomatic Complexity | Low to Moderate – static code analysis integration | Low – analysis tools with minimal runtime overhead | Objective code complexity measure, predicts testing effort | Code review prioritisation, refactoring decisions | Identifies complex code, enforces coding standards |
| Defect Density | Low – defect tracking integration and size measurement | Low to Medium – defect database and size metrics | Quality benchmarking, defect-prone area identification | Release readiness, quality benchmarking | Objective quality comparison, predictive insights |
| Mean Time Between Failures (MTBF) | Moderate – requires long-term failure data collection | Medium to High – monitoring and incident systems | Reliability measure, maintenance planning | Mission-critical systems, SLA monitoring | Direct user impact metric, supports business continuity |
| Technical Debt | Moderate to High – requires static analysis and estimation tools | Medium – static code analysis and expert input | Quantifies remediation effort, tracks quality debt | Quality improvement planning, refactoring prioritisation | Makes hidden quality issues visible, aids management decisions |
| Customer Satisfaction Score (CSAT) | Low – survey and feedback system integration | Low to Medium – multiple feedback channels and analytics | User-perceived quality, satisfaction trends | Product feedback, user experience measurement | Reflects real user experience, drives business improvements |
| Code Churn Rate | Low – VCS data extraction and analysis | Low – version control system data | Identifies unstable code, predicts defect-prone areas | Architectural risk assessment, code review focus | Easy to calculate, guides testing and refactoring |
| Performance Metrics | Moderate to High – requires monitoring and load testing setup | High – infrastructure for real-time monitoring and testing | Measures speed, throughput, resource use, detects bottlenecks | Scalability planning, performance tuning, SLA enforcement | Directly impacts user experience, enables optimisation |

Software quality metrics, from code coverage and cyclomatic complexity to defect density and MTBF, provide a crucial window into the health and performance of your software. By understanding and actively monitoring these metrics, alongside technical debt and customer-focused measures such as CSAT, you can identify areas for improvement, predict potential issues before they impact your users, and ultimately deliver a superior product. Mastering these concepts empowers you to make data-driven decisions, optimise development processes, and build software that is not only functional but also robust, scalable, and maintainable. This translates to reduced development costs, improved customer satisfaction, and a stronger competitive edge in the UK market.

The key takeaway here is that software quality metrics aren’t just numbers—they are vital tools for building better software. By implementing a robust system for tracking and analysing these metrics, you can ensure your projects stay on track, meet user expectations, and deliver a return on investment.

Ready to elevate the quality of your software? Iconcept Ltd, a premier provider of Laravel web development services, specialises in building high-quality, maintainable web applications. We leverage these essential software quality metrics throughout our development process, ensuring your project meets the highest standards of quality and performance. Visit Iconcept Ltd today to learn how our expertise in Laravel development and commitment to software quality can help you achieve your business goals.